From mapping hazard-prone urban areas in Tanzania to providing drought early warnings, disaster risk management professionals are finding new applications of machine learning (ML) at a rapid pace.

Highlighted by the UK government's Frontier Data Report as a technology with "vast potential" to support climate adaptation and the Sustainable Development Goals, ML technology now represents a global industry valued at $27 billion per year.

In disaster risk management, ML can help create actionable information faster and at lower cost: whether evaluating satellite imagery to determine flooded areas; processing street-level photography to identify structural characteristics of buildings; or assessing urban growth patterns to understand future vulnerabilities.

But amid the potential, what risks and challenges arise when a professional community embraces unfamiliar technologies? Together with our partners Deltares and the University of Toronto, the Global Facility for Disaster Reduction and Recovery (GFDRR) convened a working group that studied ethics, bias, and fairness concerns that arose in other fields from healthcare to law enforcement.

The working group's findings form the basis for a new report, Responsible AI for Disaster Risk Management, which recommends key steps to harness the benefits of machine learning while avoiding potential pitfalls.

Ethics and bias in ML: four challenges

A retail firm using ML guesses that a teenager is pregnant before her parents know.

A healthcare provider's algorithms systematically underestimate the care needs of black patients due to social bias in “training data.”

Both are real-life examples documented in studies of artificial intelligence (AI) ethics. Could similar types of challenges surface in disaster risk management? What should our professional community be doing now to avoid mistakes that were made in early-adopting sectors? Our working group identified four key concerns:

1. Bias

ML algorithms can learn to mimic and reproduce pre-existing human biases. In other sectors, health expenditures, hiring decisions, and even criminal sentencing recommendations have been shaped by algorithms that proved biased against minorities.

When an ML algorithm identifies 97% of large, wealthy houses in a satellite image but only 82% of a poor neighborhood's informally constructed residences, this is likewise an example of machine learning bias.
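One way to surface this kind of gap is to compute the detection rate separately for each neighborhood type rather than reporting a single overall figure. The sketch below is illustrative only: the group labels, the helper name `detection_rate_by_group`, and the assumption of 100 ground-truth buildings per group are hypothetical and are not taken from the report.

```python
from collections import defaultdict

def detection_rate_by_group(records):
    """Return the share of known buildings the model detected, per group.

    `records` is an iterable of (group, detected) pairs, one per building
    that is known to exist on the ground (hypothetical audit data).
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        if detected:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}

# Hypothetical audit sample mirroring the figures in the text:
# 97 of 100 formal houses detected, 82 of 100 informal residences detected.
records = (
    [("formal", True)] * 97 + [("formal", False)] * 3
    + [("informal", True)] * 82 + [("informal", False)] * 18
)

rates = detection_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} detected")
print(f"gap between groups: {gap:.0%}")
```

A single aggregate score for this sample would be about 90%, which hides the fact that the model misses informal residences six times as often as formal houses. Reporting the per-group rates makes the disparity visible before deployment.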

2. Privacy and security

ML techniques can uncover details about a person’s home, mobility patterns, socioeconomic status, and more. Due to the humanitarian nature of disaster risk management, privacy or security harms are often weighed against the values of openness and transparency, requiring project teams to address important questions of informed consent and privacy protection.

3. Transparency and explainability

Predictions made by machine learning systems are often difficult to explain, even for the developers of the system. This “black-box” problem can have serious implications: local expertise can be marginalized and public trust undermined. Engaging local communities in developing algorithms, and building them with explainability in mind, can help mitigate these risks.

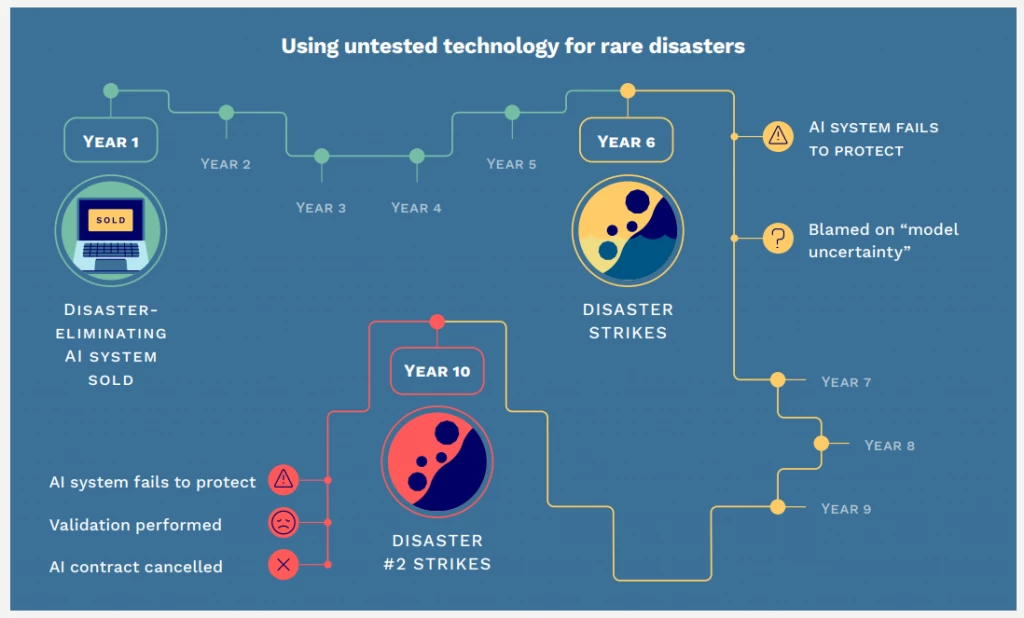

4. Hype

Hype and publicity surrounding AI can create unrealistic expectations of what these technologies can do. People may focus their funding on novel or exciting approaches when traditional alternatives are equally effective, and hype-driven technologies could be deployed for extended periods before their limitations are recognized. Guarding against "AI hype" is important to realizing long-term benefits.

Getting ahead of the curve

The working group developed a set of recommendations for promoting fair, just, and sustainable use of ML technologies in disaster risk management. They include:

- Proceed with caution, giving priority to alternative or traditional approaches if risks are significant or unclear.

- Draw on the experiences of other fields and domains.

- Work in a transparent fashion, collaborating with the communities and people represented in our technologies.

- Diversify project teams through broader backgrounds, skill sets, and life experiences.

- Remember that technology is never neutral. AI technologies inevitably encode preferences for some values, priorities, and interests. Failing to account for this can reinforce existing inequities and add to the vulnerabilities of already at-risk communities.

The good news is that for each concern identified in our report, practical actions can help mitigate the risks. These include engaging local communities in creating the training data used by ML tools, auditing algorithms for social bias before deploying them, and prioritizing a new generation of “explainable AI” models designed to produce outcomes that humans can readily understand and trace back to their origins.

Particularly when combined with the growing availability of Earth Observation data, ML is becoming a key tool to provide more timely, detailed, and accurate information that local stakeholders need to underpin their adaptation to a changing climate.

To realize its potential, it is important to maintain trust, keep communities in the driving seat, and get ahead of the ethical challenges that have beset other fields. As Eleanor Roosevelt wrote: "Learn from the mistakes of others. You can't live long enough to make them all yourself."

To download the report, click here. To learn more about Responsible AI for DRM and the working group, please contact opendri@gfdrr.org.

The Responsible AI for DRM authoring team consisted of Robert Soden (University of Toronto & GFDRR), Dennis Wagenaar (Deltares), and Annegien Tijssen (Deltares).

Related:

Demystifying machine learning for disaster risk management

https://blogs.worldbank.org/opendata/demystifying-machine-learning-disaster-risk-management

When community mapping meets artificial intelligence

The Open Cities AI Challenge

https://towardsdatascience.com/the-open-cities-ai-challenge-3d0b35a721cc
